Home Office algorithm to detect sham marriages may contain built-in discrimination

Newlyweds could be deported if flagged as suspicious by a new Home Office algorithm that may discriminate based on nationality, the Bureau can reveal. The system, outlined in an internal government document obtained by the Public Law Project, is built on potentially biased information that also includes the age gap between partners.

The Home Office introduced an automated system to detect sham marriages in March 2015 as part of the “hostile environment” immigration policy. Its current iteration, a triage system implemented in April 2019, was developed through machine learning, a process by which a computer algorithm finds patterns in source data and applies them to new data. The historical information used in this case came from the marriage referrals received by the Home Office over an unspecified three-year period; any biases that might exist within this source data are likely to be projected forward by the algorithm.

An equality impact assessment (EIA) conducted by the Home Office revealed a number of public law issues with the process, including the possibility of “indirect discrimination” based on age. Campaigners warn that its use of data leaves the process open to similar discrimination around nationality, echoing previous concerns about the systems used by the department in recent years.

“The Home Office data on past enforcement is likely to be biased because Home Office enforcement is biased against people of certain nationalities,” said Chai Patel, legal policy director of the Joint Council for the Welfare of Immigrants (JCWI).

Last year, successful legal action was taken by JCWI and the not-for-profit group Foxglove against the “racist algorithm” used by the Home Office for its visa system. The groups argued that, due to its reliance on historical visa data, the automated system perpetuated discrimination against certain nationalities. On suspending the system in August, the Home Office said it would conduct a review of its operation.

The Home Office told the Bureau: “The purpose of the EIA as part of the triage system is to ensure we are able to identify any potential risk of discrimination and assess the impact. It is then considered whether the discrimination is justifiable to achieve the aims of the process.”

In 2020, an independent review into the Windrush scandal reported that the Home Office demonstrated “institutional ignorance and thoughtlessness towards the issue of race … consistent with some elements of the definition of institutional racism”.

It is well established that AI technologies and machine learning often reflect societal biases. As Foxglove director Martha Dark explained, with regard to the visa process: “An issue known to plague algorithmic systems is the feedback loop: if the algorithm refused applicants in the past, that data will then be used to justify keeping that country on the list of undesirable nations, and grade citizens of that country red, denying them visas again.”

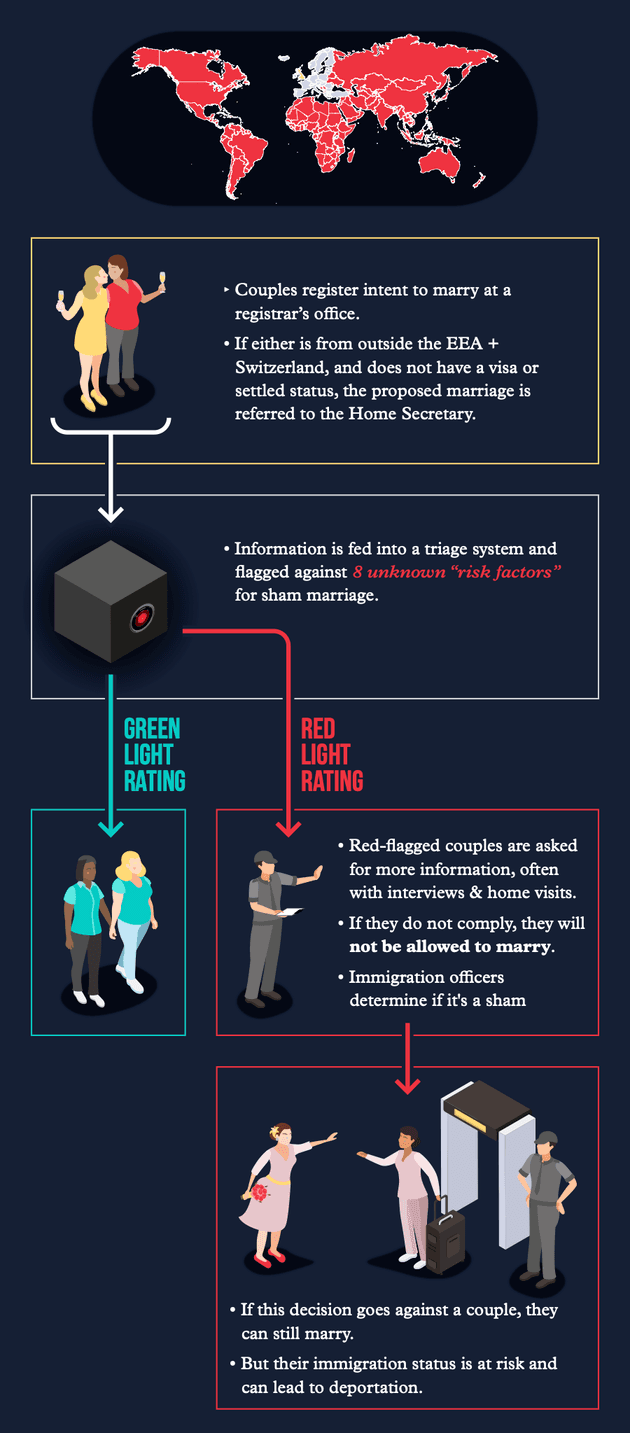

In the case of sham marriages, the Home Office’s triage system comes into play once a couple has given notice to a registrar. Should either or both parties be from a country outside of the UK, Switzerland and the European Economic Area, or have insufficient settled status or lack a valid visa, the couple is referred to the triage system.
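The referral step described above can be written as a simple rule. The sketch below is a literal reading of that description, not the Home Office's actual logic: the class, field and function names are hypothetical, and it assumes that any one of the three conditions, for either party, is enough to trigger a referral.

```python
from dataclasses import dataclass


@dataclass
class Party:
    # All three field names are hypothetical, chosen for this sketch only.
    outside_exempt_area: bool          # not from the UK, Switzerland or the EEA
    insufficient_settled_status: bool
    lacks_valid_visa: bool


def party_triggers_referral(party: Party) -> bool:
    """Assumption: any single condition is enough to trigger referral."""
    return (
        party.outside_exempt_area
        or party.insufficient_settled_status
        or party.lacks_valid_visa
    )


def couple_is_referred(first: Party, second: Party) -> bool:
    """A couple is referred if either or both parties trigger referral."""
    return party_triggers_referral(first) or party_triggers_referral(second)
```

Referral only sends a couple into the triage system; the green or red light is decided afterwards by criteria that have not been published.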

The system then processes their data and allocates them either a green or red light. A red-light referral, which means the couple need to be investigated further, can put a person’s immigration and visa status at risk and could lead to legal action and deportation.

The EIA lists eight criteria the system takes into account when a marriage is flagged for analysis, but this list has been redacted by the Home Office.

A graph included in the document shows the number of marriages that go through the system involving specific nationalities and the percentage of those marriages that are given a red light. According to the graph, the nationalities with the highest rate of triage failure – between 20% and 25% – are Bulgarian, Greek, Romanian and Albanian. Those most frequently referred to the triage system include Albanian, Indian, Pakistani and Romanian.
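The two measures in the graph, the number of referrals per nationality and the share given a red light, can in principle be calculated from a list of triage outcomes. The sketch below shows one way to do so. The function name and record layout are assumptions, and the sample records use placeholder labels "A" and "B" with made-up outcomes; they are not figures from the EIA.

```python
from collections import defaultdict


def red_light_rates(referrals):
    """Return {nationality: (number referred, share given a red light)}.

    `referrals` is an iterable of (nationality, outcome) pairs, where
    outcome is "red" or "green". This layout is hypothetical.
    """
    totals = defaultdict(int)
    reds = defaultdict(int)
    for nationality, outcome in referrals:
        totals[nationality] += 1
        if outcome == "red":
            reds[nationality] += 1
    return {n: (totals[n], reds[n] / totals[n]) for n in totals}


# Placeholder records for illustration only, not real data.
sample = [
    ("A", "red"), ("A", "green"), ("A", "green"), ("A", "green"),
    ("B", "green"), ("B", "green"),
]
rates = red_light_rates(sample)
```

A gap in red-light rates between groups does not by itself prove discrimination, but it is the kind of pattern that the underlying data and screening criteria would be needed to explain.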

“There are quite clearly some clients whose marriage is more likely to be investigated than others,” said Nath Gbikpi, an immigration lawyer at Islington Law Centre. “One of the ‘red flags’ is obviously immigration status, but that is clearly not the sole indicator, as I have had clients with the same immigration status – some get investigated while others don’t. I wasn’t surprised to hear that there is an algorithm and I wasn’t surprised to hear that some nationalities are picked on more than others.”

Foxglove’s Martha Dark said: “It is concerning that nationality could be used in a similar way [to how it was used in the visa tool] in the algorithm to detect sham marriages.”

The EIA states that while nationality data is used in the triage process, nationality alone does not determine the outcome. Similarly, age is not a determining factor but someone “in the younger or older [age] brackets” is more likely to be part of a couple with a greater age difference, which is in turn a criterion considered by the triage system.

In order to confirm whether or not the system is discriminatory, further transparency around the underlying data and the eight screening criteria is necessary.

Chai Patel of the JCWI said: “Until there has been a full review of all systems and data, we can assume that any system the Home Office operates is not free from bias.”

The EIA suggests the Home Office has conducted a separate review of the system’s impact on different nationalities but it has rejected FOI requests for more information.

Jack Maxwell, a research fellow at the Public Law Project, said: “From what we have seen, the Home Office's internal analysis of these risks was inadequate. The algorithm appears to have been developed and deployed without any transparency or independent oversight.

“The Home Office has so far refused to disclose all of the ‘risk factors’ used by the algorithm to assess a case. In the absence of such disclosure, it is impossible to tell whether those risk factors are contributing to unjustified indirect discrimination.

“The Home Office must allow transparency, independent oversight, and ongoing monitoring and evaluation of its use of algorithms to ensure that its systems are fair and lawful.”

The Home Office told the Bureau: “Those who abuse marriage to enter the UK illegally will feel the full force of the law and it is right that we have a system in place to prevent this. Our triage system is not indirectly biased, and our EIA is there to detect any possible bias while ensuring we are able to investigate and disrupt sham marriages effectively.”

Dark called for the details of how this system operates to be disclosed. “The decisions made by these systems can be life-changing – who can travel or get married,” she said. “Yet the public have never been consulted or asked about the use of this system.”

Reporter: Alice Milliken

Desk editor: James Ball

Investigations editor: Meirion Jones

Production editor: Alex Hess

Fact checker: Maeve McClenaghan

Graphic: Kate Baldwin

Legal team: Stephen Shotnes (Simons Muirhead Burton)

Our reporting on Decision Machines is funded by Open Society Foundations. None of our funders have any influence over the Bureau's editorial decisions or output.

Header image: WireStock/Alamy
