Transparency concerns over secret government data projects

Every day automated systems make decisions about us that shape our lives.

A CCTV camera connects to a facial recognition system to judge who should be stopped and searched. A shop terminal connects to a credit reference agency to decide if you can make your purchase. Even decisions about who goes to jail and who walks free are in the hands of algorithmic systems.

But some of the most contentious new uses of these technologies are happening behind closed doors. Experts and campaigners fear that rolling out these systems without public scrutiny risks damaging society, especially if they are introduced before they are properly assessed.

One example campaigners point to is the Information Commissioner’s Office (ICO), a UK regulator set up to promote “openness by public bodies and data privacy for individuals”. It has chosen to support the development of several controversial big data projects without public consultation.

The ICO selected ten projects from a longlist of 64 for a “sandbox” scheme – a chance for development without regulatory enforcement – trialling expanded use of algorithms in often-controversial areas.

These include concepts with potentially massive ramifications, such as airport facial recognition, a programme to identify those at risk of becoming criminals and a platform to track possible financial crime. Yet the regulator has released only single-paragraph descriptions of the projects and has refused to give out any further information.

“While this is being presented as a sandbox, the way it is shaping up is more like a blackbox,” said Phil Booth, from the data privacy campaign group medConfidential. “If this is something which is genuinely testing the boundaries of the legislation, I think the regulator that’s supposed to be protecting all of our data should be absolutely clear and up front about what boundaries are actually being tested.”

He said it was unacceptable that the ICO had released only one paragraph about each project. “It’s the lack of clarity like that that hides all sorts of nasty surprises,” he said, adding that the body should have published redacted versions of every application to the scheme as well as the reasoning for the decisions made on each. This would, in his opinion, allow the public to see which companies want to push data protection limits and why, as well as where the regulator draws the line.

Dr Jennifer Cobbe, a researcher at the Cambridge Trust and Technology Initiative, said that although sandbox initiatives could be a good idea, the ICO should have been more transparent – especially given the inclusion of controversial technology such as facial recognition.

“There’s very little information,” she said. “We might not necessarily need to know the details of the criteria or exactly how each company fulfils those criteria, but some more information about the selection process ... would be useful.”

The ICO’s sandbox is just one of many transparency failings concerning the development of digital systems for use in British public life, the Bureau of Investigative Journalism has discovered.

Across government, from Whitehall to local councils, authorities are failing to share vital details about contracts with private companies providing IT services, despite such systems growing in complexity and importance.

The UK boasts that it is a world leader in open source data, but in an analysis of contracts for bespoke systems listed on Digital Marketplace, a site that connects government departments with tech services suppliers, the Bureau found that two-thirds of more than 2,300 closed contracts did not show which company won the tender.

The Bureau’s analysis also found that only about a quarter of 40 public authorities were willing or able to share audit trails for their purchases of off-the-shelf digital products. Others refused the Bureau’s request under Freedom of Information laws on the grounds that it would be too expensive to gather the data, even though official guidance says such audit trails should be kept in an easily accessible place.

“It’s disappointing,” said Cobbe. “Generally the government has a significant problem around transparency of its plans for technology.”

She added: “If companies or the government are developing machine learning systems to do certain things, then we have serious risks of biases, of errors in those systems … Without the transparency … we actually have no meaningful way to exercise oversight of them to try to determine and identify any errors.”

Earlier this year Elizabeth Denham, the information commissioner, called for an extension of the UK’s transparency laws. Only public bodies are required to answer requests under the Freedom of Information Act and Environmental Information Regulations, but Denham said this was woefully inadequate when substantial public spending goes to private contractors – suggesting public access should extend to such businesses.

Asked about the lack of transparency around her own office’s sandbox scheme, a spokesperson said: “In order for us, as the regulator, to give effective advice there are times where that advice needs to be given in confidence. This does not mean the legal requirements for compliance are removed – full responsibility for compliance remains with the organisation.

“Each sandbox project was assessed in relation to defined criteria that are freely available on our website along with full details of the development, public consultation process, and how the sandbox will work in practice. We published an analysis of responses to that consultation in which there was overwhelming support for the idea.”

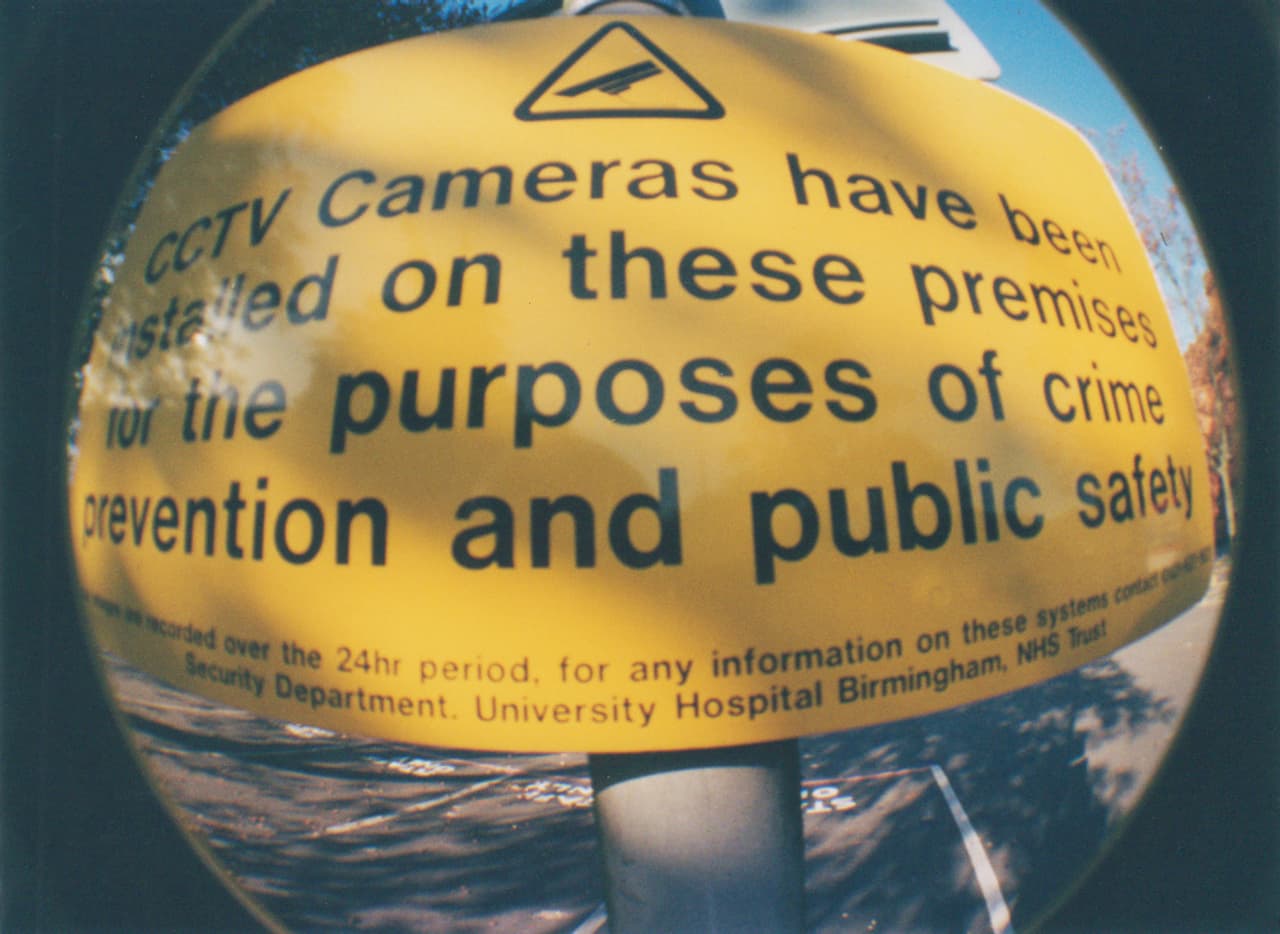

Header image: A sign about CCTV at a hospital in Birmingham. Credit: Richard Clifford/Flickr